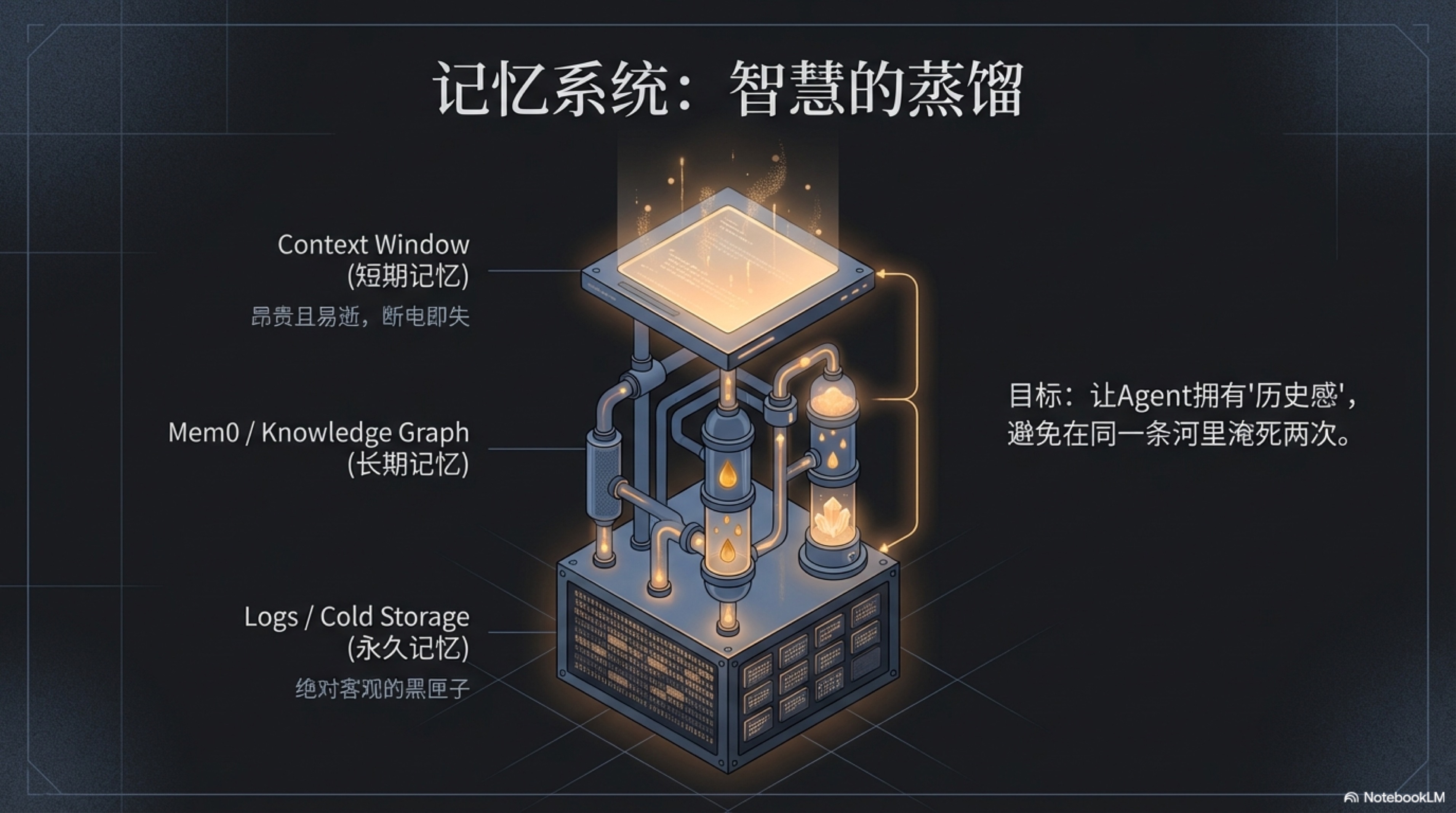

3.2 Memory System

The ReAct paradigm we explored in the previous section endows silicon-based employees with the ability to "think while walking" in the fog. But this immediately leads to a deeper and more fatal question:

How does a genius who "forgets as soon as they think" not drown in the same river twice?

If every task is a brand new adventure, and no decision can draw even the slightest wisdom from history, then however powerful its instantaneous reasoning, this agent is essentially still a "dice machine" armed with ReAct. Its actions, successful or failed, pass like the wind and leave no trace, offering no lesson for the next one.

To transform this fleeting "inspiration" into accumulable "wisdom," we must install a real brain for this new species. The core of this brain is its memory system—a carefully designed multi-layer structure mimicking biological intelligence. It is responsible for distilling and refining chaotic raw experiences into precious knowledge that guides the future.

Short-term Memory (Context) — The Fleeting Workbench

First is the Short-term Memory of the silicon-based employee. This is the famous "Context Window" of large language models.

It is like the temporary "workbench" when we think, or the high-speed RAM in a computer. When you issue instructions, provide examples, or have multiple rounds of conversation with it, all relevant immediate information is spread out on this table for it to quickly grab and process. The advantage of this table is extreme efficiency and speed, ensuring the fluency of dialogue and the coherence of tasks.

But its disadvantage is just as fatal: like RAM, it is volatile, and everything on it is lost when the power goes off.

Once the session ends or the API call is completed, this "workbench" is instantly cleared, and all precious context—the prompts you carefully designed, the subtle differences it just realized—will vanish into thin air. It's like you hired a talented consultant at a high price; every conversation with him sparks inspiration, and he can always precisely grasp your intentions. But as soon as he walks out of the room, he forgets everything you just talked about. In the next meeting, you have to start from "Hello, who am I" and repeat all the background information verbatim.
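The "workbench" behavior can be sketched in a few lines. This is a toy model under stated assumptions, not any vendor's API: `Workbench`, `fake_model`, the character budget standing in for a token budget, and the budget code `B-42` are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Workbench:
    """Hypothetical short-term memory: the messages of ONE session."""
    max_chars: int = 200  # stand-in for a real token budget (assumption)
    messages: list[str] = field(default_factory=list)

    def add(self, message: str) -> None:
        self.messages.append(message)
        # Evict the oldest messages once the budget is exceeded,
        # always keeping at least the newest one.
        while sum(len(m) for m in self.messages) > self.max_chars and len(self.messages) > 1:
            self.messages.pop(0)

    def prompt(self) -> str:
        return "\n".join(self.messages)


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call: it only 'knows' what is in the prompt."""
    return "B-42" if "B-42" in prompt else "unknown"


# Session one: the fact is on the workbench, so the model can use it.
session_one = Workbench()
session_one.add("The budget code for this project is B-42.")
answer_in_session = fake_model(session_one.prompt() + "\nWhat is the budget code?")

# Session two: a new workbench starts empty, so the fact is gone.
session_two = Workbench()
answer_after_reset = fake_model(session_two.prompt() + "\nWhat is the budget code?")
```

In this sketch nothing carries over between `session_one` and `session_two` unless the caller re-sends it, which is exactly the "repeat all the background information" cost described above.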

For an AI-native enterprise pursuing efficiency and automation, this "periodic amnesia" is unacceptable. Therefore, we need a completely opposite mechanism to compensate.

Permanent Memory (Cold Storage/Logs) — The Tamper-proof Black Box

Opposite to the fleeting workbench is the Permanent Memory of the silicon-based employee. Its form is not refined knowledge, but an all-encompassing, tamper-proof, millisecond-precise Audit Log.

Imagine the "black box" on an airplane. No matter what happens, it faithfully records every operation, every instruction, and every interaction with the external world. The AI Agent's permanent memory is exactly such a digital black box. Without bias or omission, it records everything the Agent has done since its birth: every decision made, every API call, every success and failure.
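One common way to approximate such a black box is an append-only log in which each entry stores the hash of the previous one. The sketch below is a minimal illustration under assumptions: `AuditLog`, `LogEntry`, and the event fields are hypothetical names, and hash chaining makes tampering detectable rather than impossible, so a real deployment would also need write-once storage and access control.

```python
import hashlib
import json
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEntry:
    index: int
    timestamp_ms: int   # millisecond-precision timestamp
    event: dict         # must be JSON-serializable
    prev_hash: str      # hash of the previous entry (the "chain")
    entry_hash: str     # hash over this entry's own content


def _digest(index: int, timestamp_ms: int, event: dict, prev_hash: str) -> str:
    payload = json.dumps([index, timestamp_ms, event, prev_hash], sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only, tamper-evident log."""
    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._entries: list[LogEntry] = []

    def append(self, event: dict) -> LogEntry:
        prev_hash = self._entries[-1].entry_hash if self._entries else self.GENESIS
        index = len(self._entries)
        timestamp_ms = time.time_ns() // 1_000_000
        entry = LogEntry(index, timestamp_ms, event, prev_hash,
                         _digest(index, timestamp_ms, event, prev_hash))
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = self.GENESIS
        for e in self._entries:
            expected = _digest(e.index, e.timestamp_ms, e.event, e.prev_hash)
            if e.prev_hash != prev_hash or e.entry_hash != expected:
                return False
            prev_hash = e.entry_hash
        return True


log = AuditLog()
log.append({"action": "api_call", "target": "calendar", "ok": True})
log.append({"action": "decision", "choice": "retry"})
chain_is_intact = log.verify()
```

The design choice worth noting is that the log never offers an update or delete method: auditors only ever call `append` and `verify`.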

The core value of this memory does not lie in allowing the Agent to directly call it to "recall" something—because it is full of massive, unprocessed raw data, and reading it directly is like looking for a needle in a haystack. Its true value is to provide us—system architects and auditors—with an absolutely objective, traceable Single Source of Truth.

When the system has a serious error and we need to review the root cause; when an Agent's behavior deviates from expectations and we need to analyze its decision chain; or even in the future, when our AI enterprise faces legal compliance reviews and needs to prove that a decision was not malicious—this "black box" will be our most powerful, and perhaps only, basis.

It is not directly responsible for making the Agent "smarter"; it is responsible for ensuring that the Agent's actions are always under our supervision, making this powerful digital employee always a transparent, controllable, and accountable existence.

Long-term Memory — The Cornerstone of Wisdom and Knowledge Distillation Factory

Okay, now we have an "instant workbench" and an "eternal black box." But true wisdom is neither fleeting inspiration nor chaotic accounts. Wisdom is the digestion, absorption, and refinement of experience.

This is the mission of Long-term Memory, and also the core of building a truly "learning" agent.

We must be clear that the RAG (Retrieval-Augmented Generation) solution based on vector databases, which was popular a few years ago, is not fully up to this role. It is more like a library piled with raw materials. Although it can find relevant books (Documents) based on your question (Query), it cannot read, understand, and summarize these books itself. If you ask it "Why did it fail last time?", it might hand you a ten-thousand-word error log verbatim and leave you to read it yourself.

The goal of a true long-term memory system is not to store information, but to distill wisdom. The new generation of "Universal Memory Layer" systems, represented by the open-source project Mem0, reveals this possibility[^1][^2][^3]. Like a tireless assistant, it follows an "Extract-Associate-Integrate" three-step process far more precise than RAG, transforming raw experience into usable knowledge:

Extract structured "Core Facts": It first proactively and continuously reads the all-encompassing but sprawling running ledger kept in "Permanent Memory," and extracts structured "Core Facts" from it. For example, from the log of a thirty-minute meeting recording it can precisely extract one key piece of information:

"Decision: Project 'Alpha' deadline adjusted from August 1 to September 15, due to delays from upstream API supplier."

Establish traceable "Memory Index": No extracted "Core Fact" is left as a castle in the air. Each one carries a precise index pointing to its original source in "Permanent Memory" (here, the specific timestamp in that thirty-minute recording log). This gives the system a powerful "Drill Down" capability: we can quickly locate key information by retrieving "Core Facts," and, when necessary, instantly return to the original and complete context to verify details (for example, to find out who proposed the extension at the meeting).

Execute "Non-destructive" "Smart Integration": This is the most critical step. When a new "Core Fact" is extracted, Mem0 compares it with its existing memory bank and executes an elegant update logic:

- If it is brand new knowledge, Add.

- If it conflicts with old facts (such as the project deadline adjustment mentioned above), the system will adopt the new fact while marking the old fact as "Outdated" but never deleting it.

- If the new fact supplements the old fact, the system will Merge them.

This "non-destructive update" mechanism is crucial. It preserves a complete "sense of history" for AI, making it understand that "we once planned to launch on August 1, but now changed to September 15, because...", thus avoiding memory confusion in future decisions.
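The three steps can be sketched as a small in-memory store. This is an illustration of the idea only, not Mem0's actual API or data model: `CoreFact`, `FactStore`, the `log:meeting-N` references, and the third "confirmed in follow-up" event are hypothetical. The rule used here to tell a conflict from a supplement (same key with a different value versus same key with the same value) is a deliberate simplification; a production system would need a far richer comparison.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CoreFact:
    key: str                 # what the fact is about, e.g. "alpha.deadline"
    value: str
    source_refs: list[str]   # "memory index": pointers into permanent memory
    notes: list[str] = field(default_factory=list)
    outdated: bool = False   # superseded facts are flagged, never deleted


class FactStore:
    """Hypothetical long-term memory with non-destructive updates."""

    def __init__(self) -> None:
        self.facts: list[CoreFact] = []

    def current(self, key: str) -> Optional[CoreFact]:
        for fact in reversed(self.facts):
            if fact.key == key and not fact.outdated:
                return fact
        return None

    def history(self, key: str) -> list[CoreFact]:
        """Every version of a fact, oldest first, including outdated ones."""
        return [fact for fact in self.facts if fact.key == key]

    def integrate(self, key: str, value: str, source_ref: str, note: str = "") -> str:
        existing = self.current(key)
        new_notes = [note] if note else []
        if existing is None:
            # Brand new knowledge: Add.
            self.facts.append(CoreFact(key, value, [source_ref], new_notes))
            return "add"
        if existing.value == value:
            # Supplements what we already know: Merge sources and notes.
            existing.source_refs.append(source_ref)
            if note and note not in existing.notes:
                existing.notes.append(note)
            return "merge"
        # Conflicts with the old fact: adopt the new one, keep the old as outdated.
        existing.outdated = True
        self.facts.append(CoreFact(key, value, [source_ref], new_notes))
        return "update"


store = FactStore()
ops = [
    store.integrate("alpha.deadline", "August 1", "log:meeting-1"),
    store.integrate("alpha.deadline", "September 15", "log:meeting-2",
                    "upstream API supplier delay"),
    store.integrate("alpha.deadline", "September 15", "log:meeting-3",
                    "confirmed in follow-up"),
]
```

After these calls, `store.current("alpha.deadline")` returns the September 15 fact with both of its source references, while `store.history("alpha.deadline")` still contains the August 1 version flagged as outdated, which is the "sense of history" described above.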

Ultimately, it is this precise process of "Extract-Associate-Integrate" that builds a dynamic knowledge graph capable of self-purification and possessing a sense of history. This endows it with an extremely powerful "Super Memory" beyond the biological brain:

It can, like the human brain, forget irrelevant pixel-level details and only retain high-value, concept-level "Core Summaries" (such as "Project delayed due to supplier issues"); and like a machine, perfectly and losslessly backtrack to the full scene when that "Summary" was born through the "Memory Index" when needed (such as the complete recording and transcript of the review meeting).

This memory ability, combining "biological abstract wisdom" and "machine absolute precision," truly gives our silicon-based employees the extraordinary ability to transform raw data into structured information, and then refine that information into usable knowledge. When it faces a new task again, it is no longer a "genius" with an empty head, but will first retrieve its long-term memory and ask itself: "What successful experiences or failure lessons did I have regarding this task in the past?"
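The "ask itself first" step can be sketched as a retrieval pass that runs before the task prompt is built. Everything here is an assumption made for illustration: `Lesson`, `recall`, `build_prompt`, and the naive word-overlap scoring (which even counts stop words such as "the") stand in for whatever retrieval method a real system would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lesson:
    summary: str      # concept-level "Core Summary"
    outcome: str      # "success" or "failure"
    source_ref: str   # memory index back into permanent memory


def _tokens(text: str) -> set[str]:
    words = {w.strip(".,:;!?\"'").lower() for w in text.split()}
    return words - {""}


def recall(task: str, lessons: list[Lesson], top_k: int = 2) -> list[Lesson]:
    """Return up to top_k lessons sharing at least one word with the task."""
    task_tokens = _tokens(task)
    scored = [(len(task_tokens & _tokens(l.summary)), l) for l in lessons]
    relevant = [pair for pair in scored if pair[0] > 0]
    # Sort by score only; the sort is stable, so ties keep insertion order.
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [lesson for _, lesson in relevant[:top_k]]


def build_prompt(task: str, lessons: list[Lesson]) -> str:
    """Prepend recalled lessons so the model sees them before the task."""
    lines = ["Relevant past experience:"]
    for l in recall(task, lessons):
        lines.append(f"- [{l.outcome}] {l.summary} (source: {l.source_ref})")
    if len(lines) == 1:
        lines.append("- none found")
    lines.append(f"Task: {task}")
    return "\n".join(lines)


memory = [
    Lesson("Supplier API delay pushed the Alpha deadline", "failure", "log:meeting-2"),
    Lesson("Weekly status email was well received", "success", "log:report-7"),
]
prompt = build_prompt("Plan the deadline for project Beta with the supplier", memory)
```

Each recalled line keeps its `source_ref`, so the agent or a human reviewer can still drill down from the summary to the original record.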

This is the truest portrayal of "learning from a mistake" in the digital world. It gives the agent the ability to learn and remember, laying an indispensable physiological foundation for the silicon-based employee's ultimate leap from "execution tool" to "evolutionary partner."

Of course, having the "brain" of memory is not enough. How do we establish an effective feedback mechanism so that the agent can use this stored knowledge to guide future actions and form a true "evolutionary closed loop"? This is the PDCA cycle we will discuss in depth later in this chapter.

[^1]: Mem0 is an open-source universal memory layer designed for AI Agents. Its GitHub repository provides specific implementation code. Reference: "mem0ai/mem0: Universal memory layer for AI Agents", GitHub. Project link: https://github.com/mem0ai/mem0

[^2]: Mem0's paper abstract concisely outlines its core idea, which is how to achieve scalable long-term memory while reducing Agent latency and cost through intelligent data structures and management strategies. Reference: "Mem0: Building Production-Ready AI Agents with Scalable Long-Term Memory (Abstract)", arXiv. Paper abstract: https://arxiv.org/abs/2504.19413

[^3]: Mem0's full paper delves into its system architecture, performance benchmarks, and design philosophy in production-grade Agents, which is of high reference value for architects hoping to build advanced AI applications. Reference: "Mem0: Building Production-Ready AI Agents with Scalable Long-Term Memory (HTML)", arXiv. Full paper: https://arxiv.org/html/2504.19413v1