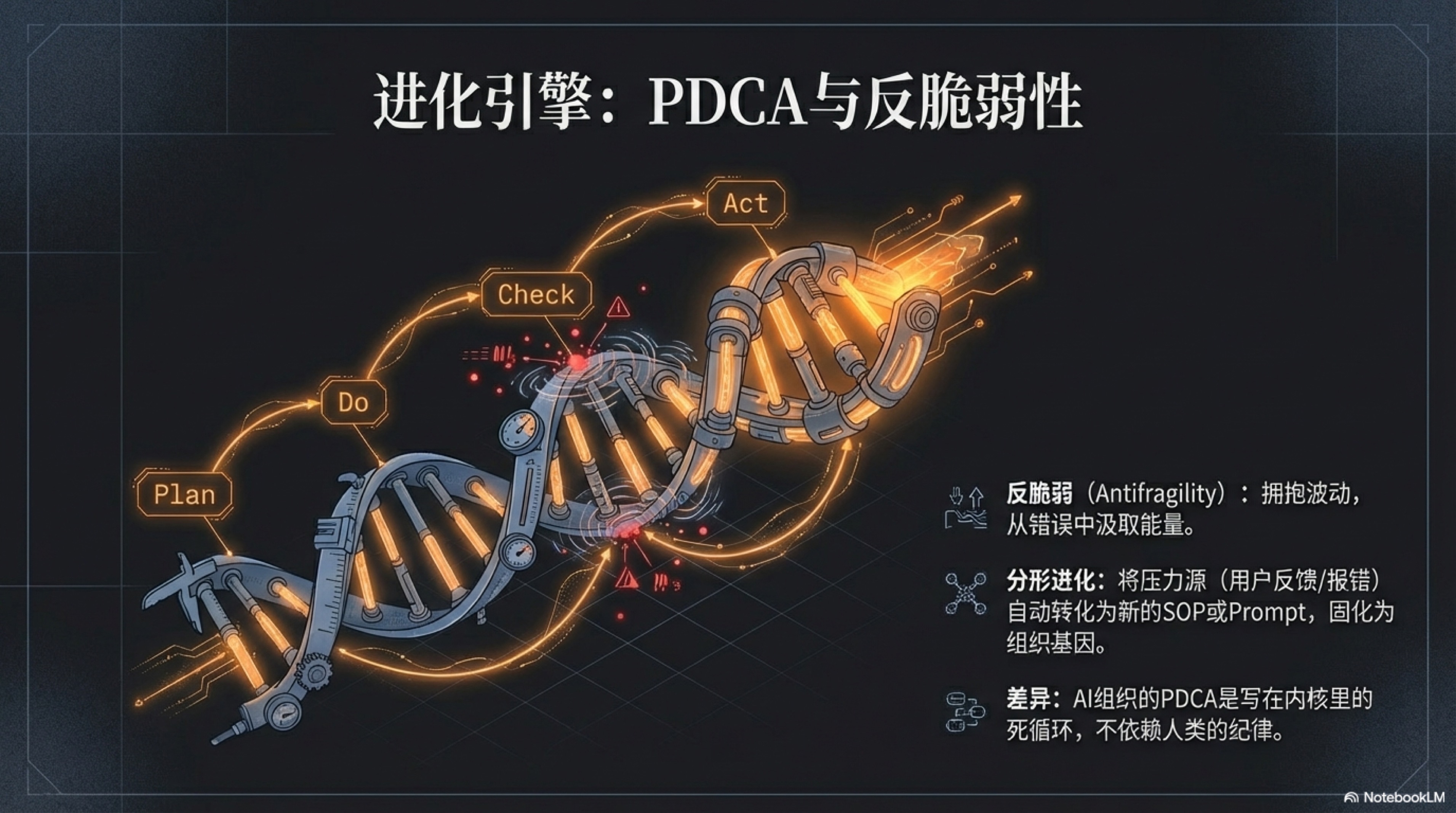

3.4 Evolutionary Engine: PDCA Cycle & Antifragility

When the heart starts beating (Initiative) and the brain starts remembering (Memory System), a digital life is truly born. But merely "living" is far from enough, especially in the brutal business world. Any life that cannot adapt to its environment and keep growing is destined to be eliminated. We must therefore give this newborn "silicon-based life" an instinctive, limitless drive to grow. This is its evolutionary engine: the PDCA cycle.

Imagine you are a coffee shop owner who wants to improve business. PDCA is like your innate instinct for improvement, only with a more structured name. First, you Plan: "Latte sales have been poor recently. I think the problem might be the taste of the milk. My guess is that if I switch from skim milk to whole milk, customers will like it more." Next, you Do this small experiment: "OK, use whole milk for all lattes this week and see what happens." A week later, you Check the data: "A miracle! Latte sales are up 30%, and several regular customers praised the richer taste." Finally, you Act: "The effect is significant. From now on, whole milk is the official standard for our lattes. Update the recipe manual immediately!" This is PDCA: a simple, universal, and powerful methodology for continuous improvement.

The modern form of this methodology was popularized by the American management thinker W. Edwards Deming. After World War II, he was invited to a war-ravaged Japan, where he developed the "Plan-Do-Check" idea first proposed by his mentor Walter Shewhart into the complete PDCA cycle and taught it, almost as gospel, to a generation of Japanese companies such as Toyota and Sony. It helped Japanese manufacturing go from a byword for "cheap and inferior" to a global benchmark for quality, contributing to the post-war economic miracle.[^1]

However, this powerful tool, proven over nearly a century, faces a perennial embarrassment in human organizations: it is easier said than done. After "Checking," that coffee shop owner might be too busy to update the recipe manual (Act), or his next "improvement plan" might be shelved indefinitely because of quarterly reports or employee turnover. In human management practice, the biggest enemy of the PDCA cycle is people's limited attention, energy, and discipline. Review meetings turn into blame games, improvement items are listed but never followed up, and "continuous improvement" often breaks off quietly right after "Do."[^2]

But for silicon-based employees, all this will be completely overturned.

PDCA is no longer a "management philosophy" barely maintained by "corporate culture" and "executive will," but a core algorithm that can be ruthlessly, precisely, and tirelessly carved into its kernel. When the AI version of the coffee shop owner starts its work, the style becomes completely different:

It can design 100 improvement Plans for lattes in one second, from milk brand to coffee bean grind size to precise water temperature. Then it Does multiple A/B tests simultaneously, with an efficiency no human can match. The most critical turning point comes in the Check and Act stages. While humans make excuses that "analyzing data is too much trouble," AI reflexively generates a detailed quantitative report after every task, coldly analyzing the causal relationship between each variable and final sales. Immediately afterward, it completes the Act at the speed of code: it does not write a "suggestion report" and wait for human approval, but directly locks the "whole milk plan," verified as optimal, into its new code of conduct. And the failed "switch to oat milk" test automatically becomes a new memory in its knowledge base: "Warning: In user group A, using Oat-03 oat milk leads to a 15% drop in satisfaction."

Here, PDCA is no longer an intermittent hand-cranked engine, but a nuclear fusion reactor running 24/7 without interruption. Every cycle, whether successful or failed, will be thoroughly "digested and absorbed" by the system, becoming indispensable nutrients for the next evolution. What doesn't kill it really only makes it stronger.
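The coffee shop loop described above can be sketched in a few lines of Python. This is only an illustrative sketch, not an actual implementation: the `PdcaEngine` class, its `measure` hook, and the weekly sales figures are all hypothetical, and the sketch assumes every score is a positive number so that relative changes are well defined.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PdcaEngine:
    standard: str                     # current official recipe
    measure: Callable[[str], float]   # hypothetical hook: run one experiment, return a score
    failures: dict[str, float] = field(default_factory=dict)  # variant -> relative change

    def cycle(self, candidates: list[str]) -> str:
        # Plan: skip the current standard and anything already known to fail.
        plan = [c for c in candidates if c != self.standard and c not in self.failures]

        # Do: measure the baseline and every planned variant.
        baseline = self.measure(self.standard)
        scores = {c: self.measure(c) for c in plan}

        # Check: express each result as a relative change against the baseline.
        changes = {c: (s - baseline) / baseline for c, s in scores.items()}

        # Act: remember every regression, then promote the best improvement, if any.
        for name, change in changes.items():
            if change < 0:
                self.failures[name] = change
        best = max(changes, key=changes.get, default=None)
        if best is not None and changes[best] > 0:
            self.standard = best
        return self.standard


# Made-up weekly latte sales for each recipe (illustrative numbers only).
weekly_sales = {"skim milk": 100.0, "whole milk": 130.0, "oat milk": 85.0}

engine = PdcaEngine(standard="skim milk", measure=weekly_sales.__getitem__)
engine.cycle(["whole milk", "oat milk"])
print(engine.standard)   # whole milk
print(engine.failures)   # {'oat milk': -0.15}
```

The point of the sketch is that Act is not optional: the winner is promoted and the failure is recorded inside the same function call that ran the experiment, so a later cycle that proposes "oat milk" again filters it out at the Plan step.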

And it is this tireless cycle that endows our AI-native enterprise with a trait that goes beyond "powerful" and is more awe-inspiring still: Antifragility.

This term was coined by the thinker Nassim Nicholas Taleb in his book of the same name, "Antifragile." It describes a property one level above the "Robustness" or "Resilience" we usually pursue.[^3] To understand how radical it is, we must first clarify three core concepts: Fragile, Robust, and Antifragile.

Imagine three parcels you are preparing to send to different parts of the world:

The first parcel contains an exquisite Ming Dynasty porcelain cup. It represents "Fragile." You must plaster it with "Fragile" labels, wrap it tightly in countless layers of bubble wrap and filler, and pray that the courier handles it gently throughout the journey, because any unexpected impact, bump, or accidental drop could instantly turn it into a pile of irretrievable fragments. Fragile systems collapse in the face of uncertainty and stress. They hate volatility and crave eternal stability.

In the second parcel is a solid Stainless Steel Block. It represents "Robust." You hardly need any packaging; you can throw it directly into the transport truck. No matter what bumps, squeezes, or even drops it experiences during transport, when it arrives at the destination, it remains that stainless steel block, unscathed. Robust systems can resist impact and pressure, maintaining their form unchanged. They are indifferent to volatility and can withstand chaos, but chaos itself does not make them better. This is the realm most companies and individuals dream of—"Solid as a Rock."

Now, look at the third parcel. There is nothing inside, only a label bearing a name from ancient Greek mythology: Hydra. This is a legendary nine-headed serpent. Whenever a hero cuts off one of its heads, it does not die; instead, two new, stronger heads grow in place of the old one. This mythical creature perfectly embodies "Antifragile." It does not merely resist harm; it draws strength from harm, growing stronger through chaos and stress. Every blow you deal it becomes fuel for its evolution. Antifragile systems love volatility, embrace uncertainty, and can even be said to actively "forage" for the "stressors" that would kill fragile systems but nourish themselves.

Taleb points out that many important systems in our lives are essentially antifragile. Take the human immune system: a child raised in a sterile environment ends up with far weaker immunity than a child who has been exposed to all kinds of bacteria and come through a few minor colds in a natural environment. Every "attack" (stress) by a pathogen prompts the immune system to produce antibodies (learning), so that it responds more effectively to similar attacks next time. Vaccines work by exploiting this antifragility: they introduce a tiny, controllable "stressor" to stimulate the entire system into a qualitative leap. Our muscles are antifragile too; the repeated "tearing" (stress) of lifting barbells ultimately produces stronger muscle fibers.

Now, let's re-examine the business world with this new cognitive framework. What is the ultimate goal of most traditional companies? To become that "Stainless Steel Block," with stable cash flow, impregnable market share, and predictable growth curves. They invest heavily in complex processes, strict KPIs, and thick firewalls, all for one core purpose: eliminate volatility and avoid mistakes. In these companies, "mistake" is a dirty word, implying loss, accountability, and a stain on one's career. The entire organizational culture systematically punishes mistakes and covers them up.

And this is precisely the most fatal form of what Taleb calls "Iatrogenics" (harm caused by the healer). To avoid every imaginable small mistake, these organizations stifle all attempts and experiments and become incredibly rigid. They are like the "Thanksgiving Turkey" that is carefully fed for a thousand days, convinced each day that its owner loves it and that life is stable and beautiful, until an unpredictable "Black Swan Event" (Thanksgiving) arrives and everything is wiped out in an instant. A system that refuses to learn from small daily mistakes is storing up energy for a fatal, irreversible collapse in the future. On the road to "Robustness," these companies have crafted themselves into the most exquisite and expensive "Porcelain Cup."

So, what should an antifragile organization look like?

It must be an organism capable of internalizing "mistakes" and "stress" into fuel for evolution. Its core logic is no longer "how to avoid making mistakes," but "how to squeeze the maximum learning value from every mistake and make itself stronger."

This sounds familiar, doesn't it?

This is exactly the essence of the evolutionary engine driven by the PDCA cycle that we designed for AI-native enterprises.

When we combine the PDCA cycle with antifragility theory, an astonishing picture appears: the management tool that frequently failed in human organizations due to "lax discipline" has turned into a ruthless, efficient, and unceasing "Antifragility Machine" in the world of AI.

Let's re-examine this cycle, but this time, in Taleb's language:

Plan/Do - Proactively Introduce Controllable Stressors: AI's Plan/Do is no longer the protracted strategic planning of humans. It can mean designing and launching 10 different versions (A/B/C/D... tests) of a webpage button color within a minute. Every tiny change is a low-cost "probe" or "provocation" aimed at the real world. The AI is actively and continuously manufacturing thousands of tiny, controllable "stressors" for itself. It does not passively wait for market feedback, but "squeezes" feedback out of the market with high-frequency experiments.

Check - Transform Stressors into Information: This is the core of the magic. Taleb stresses that stressors carry information. For fragile systems, this information is "noise"; for antifragile systems, it is "signal." When AI's countless experimental versions run in the real world, every user click, bounce, and purchase is precisely captured as data. A button color change caused a 0.1% drop in click-through rate, a new title increased user stay time by 2 seconds... To humans, these are "data reports" requiring laborious analysis; to AI, these are the most direct and pure "signals of pain or pleasure." It knows exactly in which "probe" it got burned, and in which it was rewarded.

Act - Transform Information into Structural Strength: This is the final leap from "Robust" to "Antifragile." A robust system "heals" back to its original state after an impact. An antifragile system "evolves" new functions. The "Act" stage of AI is the perfect embodiment of this evolution. When the "Check" stage confirms that an experiment (such as changing the buy button from green to orange) brings a 20% increase in conversion rate, this "successful experience" does not just sit in a slide deck. In the next second, it is automatically locked in as the system's new "standard" or "gene," permanently changing the behavior of this AI. More importantly, the failed experiments, the attempts where users "burned" it, are even more valuable. The system automatically records: "Rendering bug caused by using #FF7F50 orange in dark mode on iOS 19.5." This record is immediately turned into a new "guardrail" rule, or becomes a high-weight negative sample in the training set. It is like the immune system keeping a "wanted poster" (antibody) in the body long after defeating a virus. AI is not "fixing" mistakes; it is "absorbing" them, turning the "corpses" of its mistakes into bricks for the stronger walls of its future castle.
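The three stages above can be sketched as code. This is a rough illustration under stated assumptions, not a description of any real system: the function names, the traffic numbers, and the colour names are hypothetical, and the two-proportion z-test with a 1.96 threshold is just one common way to separate "signal" from "noise," chosen here for illustration rather than taken from the text.

```python
import math


def z_score(base_conv: int, base_n: int, var_conv: int, var_n: int) -> float:
    """Two-proportion z-statistic of a variant against the baseline."""
    pooled = (base_conv + var_conv) / (base_n + var_n)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / base_n + 1 / var_n))
    return (var_conv / var_n - base_conv / base_n) / std_err


def check_and_act(baseline, variants, guardrails, threshold=1.96):
    """Return the new standard; add clearly harmful variants to `guardrails`."""
    standard, best_z = baseline["name"], threshold
    for v in variants:
        z = z_score(baseline["conversions"], baseline["visitors"],
                    v["conversions"], v["visitors"])
        if z <= -threshold:
            # Clear harm: the failure becomes a rule that blocks future plans.
            guardrails.add(v["name"])
        elif z > best_z:
            # Clear gain: the strongest winner becomes the new standard.
            standard, best_z = v["name"], z
        # Anything in between is treated as noise and changes nothing.
    return standard


def plan(ideas, guardrails):
    """Plan step: propose only ideas that no guardrail forbids."""
    return [idea for idea in ideas if idea not in guardrails]


# Made-up traffic numbers for a buy-button colour test.
green = {"name": "green", "visitors": 10_000, "conversions": 1_000}
variants = [
    {"name": "orange", "visitors": 10_000, "conversions": 1_200},  # +20% relative
    {"name": "purple", "visitors": 10_000, "conversions": 800},
    {"name": "teal", "visitors": 10_000, "conversions": 1_010},
]

guardrails = set()
print(check_and_act(green, variants, guardrails))      # orange
print(guardrails)                                      # {'purple'}
print(plan(["purple", "teal", "blue"], guardrails))    # ['teal', 'blue']
```

In this sketch the small "teal" uplift falls inside the noise band and changes nothing, while the "purple" failure outlives the experiment as a guardrail that constrains the next Plan. A system running thousands of probes at once would need a stricter threshold, since a fixed cutoff lets more false signals through as the number of simultaneous tests grows.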

So, what we are building is no longer a traditional company that hates volatility. A traditional company is like the captain of a large ship who spends a lifetime learning how to avoid storms and find calm routes. Our AI-native enterprise is a genetically modified deep-sea monster that feeds on "storms." Drastic changes in the market environment, the sudden collapse of competitors, the fickle migration of user preferences... these "Black Swans" that terrify traditional companies are lavish feasts in its eyes, because its core cycle (PDCA) ensures that it benefits from chaos far faster than chaos can harm it.

It doesn't need to pray for market stability because it is a believer in chaos itself. It doesn't need to rely on the foresight of a genius founder because its "wisdom" comes from real data squeezed out of countless tiny failures every day, every second. It no longer pursues the vain myth of "never making mistakes," but embraces a deeper truth:

What doesn't kill me will eventually make me stronger. And it can make me stronger at a speed I cannot imagine.

[^1]: Regarding the origin of the Deming Cycle and its core role in Japanese quality management, see the official materials of The W. Edwards Deming Institute, which detail how Deming developed Shewhart's ideas into the PDCA cycle and had a profound impact on Japanese industry. Official website: https://deming.org/

[^2]: The classic interpretation of the four steps of PDCA is widely adopted as a standard definition by professional organizations such as the American Society for Quality (ASQ) and is a fundamental component of global quality management systems (such as ISO 9001). Reference link: https://asq.org/quality-resources/pdca-cycle

[^3]: Taleb, N. N. (2012). Antifragile: Things That Gain from Disorder. Random House. This book details the concept of antifragility and demonstrates how systems benefit from uncertainty and randomness through examples in finance, biology, medicine, and other fields. Read online: https://archive.org/details/antifragilething0000tale_g3g4