4.4 Evaluation System: From KPI to Evals

"You can't manage what you can't measure." This ancient maxim of management has gained a brand new, arguably even harsher interpretation in the era of AI-native enterprises. When the "employees" we manage transform from carbon-based humans with rich emotions and diverse needs to silicon-based agents made of code and data, the traditional human resource performance management system is shaken to its foundations and collapses. We are experiencing a profound revolution from subjective, lagging, and vague KPIs (Key Performance Indicators) to objective, real-time, and precise Evals (Evaluation Sets). This is not just an iteration of evaluation tools, but a generational leap in management philosophy.

The Death of KPI: An Inevitable Farewell

The traditional KPI system is essentially a complex contract designed in the industrial age and the early information age to manage and motivate human employees. It attempts to quantify human contribution through a series of indicators, but the underlying logic of its design is incompatible with the mode of existence of AI agents. You cannot assess an AI's "work attitude" because its "attitude" is always correct and absolutely obedient; you cannot measure its value by "attendance rate" because it is online 7x24 hours and never tires; you certainly cannot evaluate its "teamwork spirit" because its collaboration mode is strictly defined by code and protocols, without office politics or communication barriers in human society.

These indicators, which once occupied a core position in management, became absurd overnight. The root cause is that a large part of KPI is to solve the "Principal-Agent Problem"—to ensure that the goals of employees (agents) are consistent with the goals of the company (principal). It constrains and guides employee behavior through process indicators such as attendance, attitude, and collaboration, because the final "result" is often affected by too many uncontrollable factors and is difficult to attribute precisely. Managers hope to indirectly lead to "expected results" by assessing "correct behaviors."

However, AI employees do not have a "Principal-Agent Problem." It has no selfishness, desire, or career plan. Its only goal is the goal defined by its Objective Function. It does not need to be motivated, only clearly instructed. Managing its process is meaningless because its "process" is the execution of code, which is completely transparent and traceable. Therefore, the evaluation of AI must be, and can only be, purely result-oriented. The value of an AI Agent lies not in "how hard it looks," but in whether the results it delivers are precise and efficient, and whether it brings sufficient return (ROI) for the computing resources (Token costs) invested in it. This farewell is inevitable because the "humanity" foundation supporting the KPI building has been completely hollowed out by the probability, certainty, and infinite computing power of AI. The map of the old world cannot find any navigable coordinates on the new continent.

Three-Dimensional Evals System: A Digital Ruler for Silicon-based Employees

Bidding farewell to KPI, we need a brand new measurement system tailored for AI—the Evals system. It is no longer a quarterly or annual performance review, but an automated assessment engineering that is embedded in every corner of the system and occurs at high frequency. A comprehensive agent evaluation system must unfold from three dimensions, building a three-dimensional, dynamic quality coordinate system to ensure that this silicon-based legion runs on a precise, efficient, and stable track.

1. Hard Metrics: The Cornerstone of Efficiency and Accuracy

These are the "load-bearing walls" of the evaluation system, the binary standards defining "right and wrong," allowing no ambiguity. They are objective facts that can be precisely judged by programs, black or white.

- Pass Rate: This is the most core fundamental. For example, did the code produced by an Agent responsible for code generation pass the preset unit tests? Does the JSON file generated by an Agent responsible for data extraction completely conform to the predefined Schema structure? This is the minimum program for "job completion," and any success rate below 100% means process interruption and resource waste.

- Latency & Cost: In the world of AI, time is money, and Token is budget. How much computing time was consumed to complete a task? How many Tokens were called? These indicators directly determine the ROI of the business. An Agent that can complete the task but at a high cost may be unsustainable commercially. Economic constraints are "digital reins" forcing Agents to find optimal solutions and prevent cost loss.

- Tool Usage Accuracy: In complex Agent systems, AI needs to interact with various external tools (APIs, databases). Did it call the right tool at the right time? Were the parameters passed accurate? Incorrect tool calls will not only lead to task failure but may also trigger catastrophic downstream effects.

2. Soft Metrics: The Soul of Quality and Style

Hard metrics define the bottom line of "qualified," while soft metrics pursue the upper limit of "excellence." These metrics often involve subjective judgment, but with the assistance of AI, they can also be quantified on a large scale. They are the guardians of brand voice, user experience, and content quality.

- Tone, Style & Brand Voice: Is the reply of a customer service Agent representing the company interacting with users stiff and cold or warm and friendly? Is its language style professional and rigorous or lively and interesting? This directly relates to user experience and brand image. In the field of content creation, does the article generated by the Agent continue the creator's consistent writing style and core viewpoints? Soft metrics ensure that AI does not lose its due "personality" or "tone" while producing on a large scale.

- Hallucination Rate & Quality: This is an inherent risk of large language models. Did the Agent "fabricate" non-existent facts in the answer? For a system relying on RAG (Retrieval-Augmented Generation), how high is the "fidelity" of its answer to the original knowledge base? Does the content have depth and insight, rather than just a pile of facts? The evaluation of these quality dimensions prevents AI from becoming a high-yield "Spam Manufacturing Machine."

3. Dynamic Metrics: Evolutionary Pressure Against Entropy Increase

This is a dimension that is often overlooked but crucial. It measures the stability and adaptability of the agent in the river of time, ensuring that the system does not quietly rot without supervision.

- Model Drift: As a service, the underlying model of large language models will be constantly updated and iterated. This may cause the performance of prompts that once performed well to suddenly drop under the new version of the model. Dynamic metrics ensure that the Agent's performance remains consistent during model updates by establishing a fixed "Golden Standard" evaluation set (Golden Dataset) and conducting regular regression testing. This is equivalent to the monitoring mechanism for "job burnout" or "state decline" set up for human employees.

- Robustness: The system needs to face the malice of the external world. When encountering malicious Prompt Injection attacks or unexpected input formats, can the Agent maintain the stability and safety of its behavior? This measures the "immunity" of the system, ensuring it can operate steadily in a real, complex environment.

This three-dimensional Evals system together constitutes a dynamic, multi-level evaluation network. It digitizes, real-time-izes, and transparent-izes the performance of AI, providing a solid data foundation for the revolutionary evaluation method we will discuss next—"Silicon-based Jury."

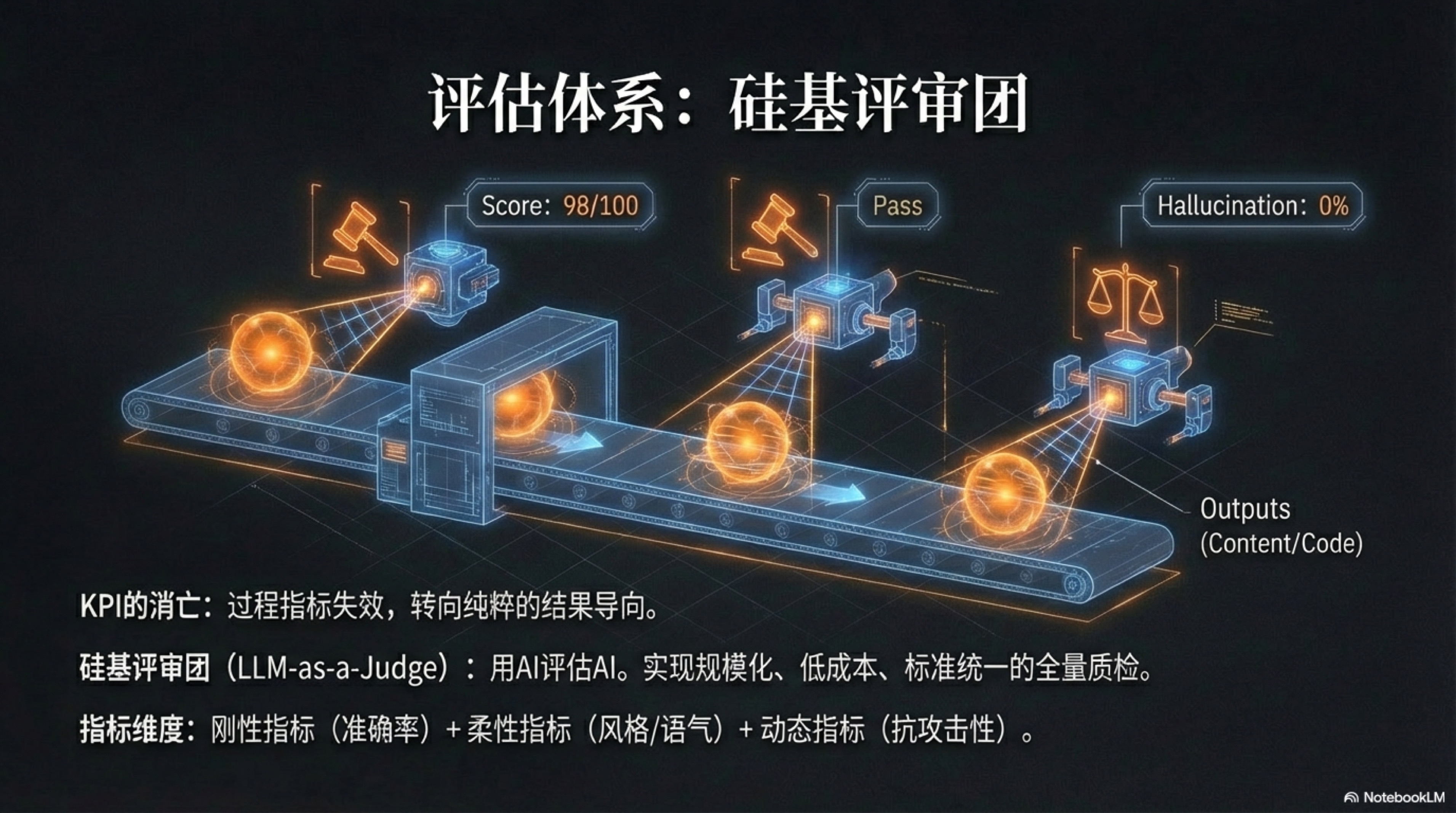

Silicon-based Jury (LLM-as-a-Judge): The Only Solution for Scalable Quality Control

How to evaluate the above Evals on a large scale, at low cost, and with high efficiency, especially those "Soft Metrics" with strong subjectivity? Are we going to hire thousands of human reviewers to correct billions of assignments generated by AI? This is obviously a paradox. The answer is to beat magic with magic—use AI to evaluate AI. This is the core concept of "Silicon-based Jury" (LLM-as-a-Judge), which is not only a technology but also a disruptive management paradigm.[^1]

Imagine such a scenario: In a fully automated content factory, a huge team of human editors was needed in the past to review manuscripts. Editor-in-Chief A might prefer a concise writing style, Deputy Editor B appreciates flowery language, and Intern C is particularly sensitive to grammatical errors. Their standards vary, their states fluctuate, and the quality of review when drowsy at 3 PM cannot be compared with that when energetic at 10 AM. Now, this editorial department is replaced by a "Silicon-based Jury." This jury consists of five AI Agents with different "personalities":

- "Conservative Judge": It strictly checks whether the article has factual errors and whether the data sources are reliable based on "Hard Metrics," maintaining the highest vigilance against any statements that may cause controversy.

- "Radical Creative Officer": It focuses on evaluating the "Soft Metrics" of the content, judging whether the viewpoint is novel, the angle is unique, and whether it can trigger reader thinking and discussion.

- "Picky Grammarian": It checks grammar, punctuation, and style consistency word by word to ensure the professionalism and readability of the text.

- "Brand Guardian": It evaluates whether the tone and values of the article are completely consistent with the preset Brand Voice against the "Constitution."

- "User Empathizer": It simulates the perspective of target readers, evaluates whether the article is easy to understand and resonates, and predicts its possible market reaction.

When a manuscript generated by the "Writer Agent" is completed, it is instantly distributed to this jury. Within a second, five "judges" review from their respective dimensions simultaneously and output structured scores and revision opinions. For example: "Conservative Judge" points out that the data cited in the third paragraph is outdated; "Radical Creative Officer" thinks the title is not attractive enough; "Brand Guardian" suggests replacing a certain overly colloquial word. These feedbacks are aggregated to form a comprehensive, objective, and highly consistent review report.[^2]

The superiority of this mode is overwhelming:

- Scale and Speed: Human reviewers might review dozens of articles a day, while the Silicon-based Jury can complete high-quality evaluations of thousands of outputs in a second, completely breaking the bottleneck of quality control.

- Consistency and Objectivity: AI has no emotions, no fatigue, and no bias. As long as the "Judge Prompt" remains unchanged, its judgment standard will always be unified, completely eliminating the huge variance caused by subjective factors in human evaluation.[^3] This repeatability is the prerequisite for realizing scientific and engineering management.

- Cost-effectiveness: Compared with the cost of hiring a large number of human experts, the cost of calling LLM API for evaluation is almost negligible. This makes "Full Quality Inspection" change from a luxurious ideal to a cheap reality.

The emergence of the Silicon-based Jury marks a profound alienation of management functions. The role of the manager (or one-person entrepreneur) transforms from a concrete "Reviewer" or "Quality Inspector" to an "Evaluation System Designer" and "Judge Prompt Writer." Your job is no longer to judge every specific result, but to define the standard of "Good" and codify and automate it. This is the ultimate embodiment of the transformation from KPI to Evals: turning quality control itself into a piece of code that can be version-managed, continuously iterated, and infinitely expanded. In the AI-native world, this is the only path to scalable excellence.[^4]

[^1]: This article clearly explains why "LLM-as-a-Judge" is considered the best LLM evaluation method currently. Its core advantages lie in unparalleled scalability, consistency, and cost-effectiveness. Reference Confident AI Blog, "LLM-as-a-Judge Simply Explained", Confident AI, 2023. Article Link: https://www.confident-ai.com/blog/why-llm-as-a-judge-is-the-best-llm-evaluation-method. [^2]: Besides theory, specific engineering implementation is also crucial. This tutorial provides a comprehensive practical guide, showing how to build and use "LLM-as-a-Judge" step by step to evaluate the output quality of agents. Reference Juan C. Olamendy, "Using LLM-as-a-Judge to Evaluate Agent Outputs: A Comprehensive Tutorial", Medium, 2023. Article Link: https://medium.com/@juanc.olamendy/using-llm-as-a-judge-to-evaluate-agent-outputs-a-comprehensive-tutorial-00b6f1f356cc. [^3]: A key application scenario of "Silicon-based Jury" is evaluating code quality. This article specifically discusses how to utilize LLM as a "judge" to evaluate code generated by other LLMs, which is a highly specialized and hugely valuable field. Reference Cahit Barkin Ozer, "Utilising LLM-as-a-Judge to Evaluate LLM-Generated Code", Softtechas on Medium, 2024. Article Link: https://medium.com/softtechas/utilising-llm-as-a-judge-to-evaluate-llm-generated-code-451e9631c713. [^4]: Any evaluation system has its limitations, and "Silicon-based Jury" is no exception. This article deeply studies various biases that may exist when LLM acts as a "judge," such as preferring longer answers (verbosity bias) or answers in earlier positions (position bias), which is crucial for us to design a fairer and more robust evaluation system. Reference Wenxiang Jiao & Jen-tse Huang, "LLMs as Judges: Measuring Bias, Hinting Effects, and Tier Preferences", Google Developer Experts on Medium, 2024. Article Link: https://medium.com/google-developer-experts/llms-as-judges-measuring-bias-hinting-effects-and-tier-preferences-8096a9114433.